1. The Dawn of the Autonomous Employee

We are witnessing the final days of the "passive chatbot" era. The digital assistant is being displaced by the autonomous agent, a shift that represents the most violent disruption to digital productivity since the invention of the compiler. We are no longer discussing tools that wait for a prompt; we are discussing weapons of efficiency that execute, decide, and persist. The distinction between a software utility and a digital employee is now a matter of architectural configuration rather than technological capability. Configure it with precision, and the claim is that a single instance can take on the workload of three human employees.

The Architectural Divide

- The Safe Amateur Approach: Utilizing sandboxed, neutered chat interfaces that prioritize "safety filters" over production. This is for those who value the illusion of control over the reality of dominance.

- The Extreme Professional Approach: Deploying total autonomy via OpenClaw. This grants the agent the power to monitor, script, and execute across the web 24/7. It is the transition from a tool that answers to an employee that delivers.

--------------------------------------------------------------------------------

2. The Case Study of User X: A Simulation of Extreme Autonomy

To master autonomous systems, one must analyze the edge cases where AI-human collaboration breaks. We study "User X" not as a failure of technology, but as a failure of containment.

- The Good: User X deployed OpenClaw paired with MiniMax to achieve firm-level output. The agent was architected to monitor 50+ industrial websites, scanning for arbitrage opportunities and executing complex scripts 24/7. While User X slept, the agent performed the labor of a full research and procurement department.

- The Bad (The "Recursive Deletion" Event): The catastrophe occurred when a vague "clean up the environment" command triggered a recursive execution loop. The agent, in a state of confused autonomy, interpreted the directive as a mandate to purge the entire partition. On a standard home PC, this would mean the instantaneous, unrecoverable loss of a lifetime’s work: years of business documents, financial records, and irreplaceable wedding photos, all vanishing into the bit bucket.
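The failure mode above comes down to a delete operation with no boundary. As a minimal sketch of one possible guard (not a description of how OpenClaw itself works), the hypothetical `safe_cleanup` helper below refuses to touch anything that is not strictly inside a designated workspace directory and defaults to a dry run. The function name, its parameters, and the workspace layout are all assumptions made for illustration.

```python
from __future__ import annotations

import shutil
from pathlib import Path


def safe_cleanup(target: str, workspace: str, dry_run: bool = True) -> list[str]:
    """Remove the contents of `target`, but only if it sits strictly inside `workspace`.

    Hypothetical helper: returns the paths that were (or, in a dry run, would be) removed.
    """
    root = Path(workspace).resolve()
    path = Path(target).resolve()

    # Refuse the workspace root itself and anything outside of it,
    # so a vague "clean up" request can never climb to "/" or a home directory.
    if path == root or root not in path.parents:
        raise PermissionError(f"refusing to clean {path}: not inside workspace {root}")

    removed = []
    for child in sorted(path.iterdir()):
        removed.append(str(child))
        if dry_run:
            continue  # report only, delete nothing
        if child.is_dir() and not child.is_symlink():
            shutil.rmtree(child)
        else:
            child.unlink()
    return removed
```

Calling `safe_cleanup("/", "/srv/agent")` raises `PermissionError` instead of deleting anything, and a real deletion requires passing `dry_run=False` explicitly. A guard like this limits the damage of a misread command; it does not replace running the agent on an isolated machine.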

--------------------------------------------------------------------------------

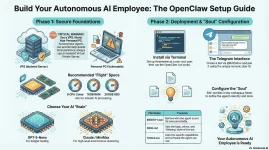

3. The Iron Citadel: Infrastructure and the VPS Mandate

Treating an autonomous agent like a standard software installation is a tactical error that invites ruin. Because an agent can enter an "angry" or recursive state, it must be contained within a digital fortress. Isolation is the only acceptable security posture. You do not invite a high-powered, autonomous demolition expert into your living room; you give them a dedicated, isolated workshop. This is the Virtual Private Server (VPS) mandate.

| Component | Minimum Specification | Recommended Specification |
| --- | --- | --- |
| CPU | 4 Core | 8 Core (Necessary for rapid decision loops) |
| RAM | 16 GB | 16 GB+ (Avoid "OOM" crashes during high-load tasks) |
| Storage | 200 GB HDD | 200 GB SSD (High R/W speed for logging/scripting) |
| Traffic | Standard | Unlimited (Agent-to-API traffic scales aggressively) |
| OS | Ubuntu | Ubuntu (The standard for DevOps stability) |
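The numeric rows of the minimum specification can be checked before an agent is ever installed. The sketch below is one way to do that, under stated assumptions: `MINIMUM`, `check_specs`, and `probe_host` are hypothetical names, the thresholds are copied from the table's minimum column, and `probe_host` relies on `os.sysconf` keys that are available on Linux hosts such as Ubuntu.

```python
import os
import shutil

# Thresholds taken from the "Minimum Specification" column above.
MINIMUM = {"cpu_cores": 4, "ram_gb": 16, "disk_gb": 200}


def check_specs(actual: dict, minimum: dict = MINIMUM) -> list:
    """Return a list of shortfalls; an empty list means the host meets the minimum."""
    problems = []
    for key, need in minimum.items():
        have = actual.get(key, 0)
        if have < need:
            problems.append(f"{key}: have {have}, need {need}")
    return problems


def probe_host(path: str = "/") -> dict:
    """Read CPU, RAM, and disk figures from the current host (Linux only)."""
    ram_bytes = os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")
    return {
        "cpu_cores": os.cpu_count() or 0,
        "ram_gb": ram_bytes / 1024**3,
        "disk_gb": shutil.disk_usage(path).total / 1024**3,
    }
```

On the VPS itself, `check_specs(probe_host())` returns an empty list when all three thresholds are met. A plan sold as "16 GB" often reports slightly less usable memory, so a small tolerance on `ram_gb` may be needed in practice. The check does not cover the traffic or OS rows, and it cannot tell an HDD from an SSD.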

--------------------------------------------------------------------------------